Junko Noguchi, Kanda University of International Studies, Japan

Noguchi, J. (2014). Evaluating self-directed learning skills in SALC modules. Studies in Self-Access Learning Journal, 5(2), 153-172.


The previous four installments of this column have explained how the Learning Advisor (LA) team at Kanda University of International Studies (KUIS) redesigned the Self-Access Learning Centre (SALC) curriculum. The installments have followed the stages of the curriculum process, adapted from the design model of Nation and Macalister (2010): framework and environment analysis (Thornton, 2013); needs analysis (Takahashi et al., 2013); principles and evaluation of the existing curriculum (Lammons, 2013); and piloting and evaluating the redesigned curriculum (Watkins, Curry, & Mynard, 2014). Although the assessment process used in the pilot was mentioned in the previous installment (Watkins et al., 2014), this installment discusses assessment for self-directed learning (SDL) in more detail. It focuses on the issue of assessment in SDL in general, the assessment process previously used in the SALC curriculum, and the changes made as part of the ongoing curriculum renewal project.

Problems with Assessing Self-Directed Learning Skills

One of the most prominent issues in assessing SDL skills in KUIS’ SDL modules is how to evaluate skills that cannot be observed directly but can be inferred, such as problem-solving skills. If unobservable aspects of students’ SDL skills are to be evaluated, deciding how to interpret the evidence of them can be a major obstacle. For instance, if we were to evaluate students’ logical problem-solving skills by analyzing what they write in their reflective learning journals, we would face numerous issues. First, we do not teach such skills explicitly; instead, we teach them implicitly through eliciting questions in our feedback on submitted modules. Second, in our SDL modules students learn and express themselves in their second language and might lack the language necessary to describe their problem-solving process accurately. Furthermore, Kim (2002) contends that Westerners and Easterners differ in their beliefs about the importance of explicitly verbalizing thoughts, which affects how they think and talk. If so, Japanese students may struggle with this dichotomy. They grow up in a culture where “silence and introspection are considered beneficial for high levels of thinking” (p. 829), but they are now studying in a context where talking and thinking are considered virtually equivalent. Students may therefore have thought deeply about their learning process yet been unable to express their thoughts lucidly, possibly because their previous education gave them few opportunities to do so. Considering all these factors, how valid can it be to evaluate students’ problem-solving skills based on what they write in the module packs or say in advising sessions?

On the other hand, since our aim is to help students develop skills for self-direction, which by definition involves a great deal of thinking, it would be difficult to judge whether the modules achieve this aim without also evaluating students’ thinking processes. Knowles (1975) defines self-directed learning as “a process in which individuals take the initiative, with or without the help of others, in diagnosing their needs, formulating learning goals, identifying human and material resources for learning, choosing and implementing appropriate learning strategies, and evaluating learning outcomes” (p. 18). Self-direction clearly depends on sophisticated cognitive processes; we therefore need to be able to assess such processes in order to validate what our SDL modules accomplish in supporting students’ development of SDL skills.

Challenges to Overcome

One remedy for this issue is to describe clearly the characteristics of the unobservable elements in students’ artifacts that are to be interpreted and evaluated. However, these characteristics are often difficult to formulate and are therefore not always stated explicitly. Schraw (2000) identified three possible reasons for the lack of such tangible outcome measures for metacognition. He maintains that the tendency to assess metacognitive growth intuitively, without concrete measures, is “due to at least three factors: (a) uncertainty about what to look for; (b) the lack of meaningful, cost-efficient measurement strategies; and (c) the lack of meaningful interpretative guidelines” (p. 312). These three issues resonated with our own concerns, which led us to create some of our own principles for assessment regarding transparency and workload. Our aim in creating an assessment band for the newly designed curriculum was therefore to address meaningfulness, transparency, and practicality as fully as possible. Admittedly, it is impossible to create a perfect assessment instrument for unobservable aspects of SDL skills. Although we were aware of this, we went ahead with this intricate task, believing that an assessment band would serve us better than having no assessment guidelines at all.

The process of constructing an assessment band will be described later in this paper. However, let us first look at what kind of assessment bands were used in the previous SDL modules at the university.

How Previous Modules were Graded

The SALC previously offered two modules, the First Steps Module (FSM) and Learning How to Learn (LHL) (see Noguchi & McCarthy, 2010, for detailed accounts of the modules), each with its own grading band that served as a guideline for grading students’ work at the end of the module. Evaluation based on these grading bands can be characterized as summative, criterion-referenced assessment, since students’ performance was evaluated at the end of the semester against a set of criteria.

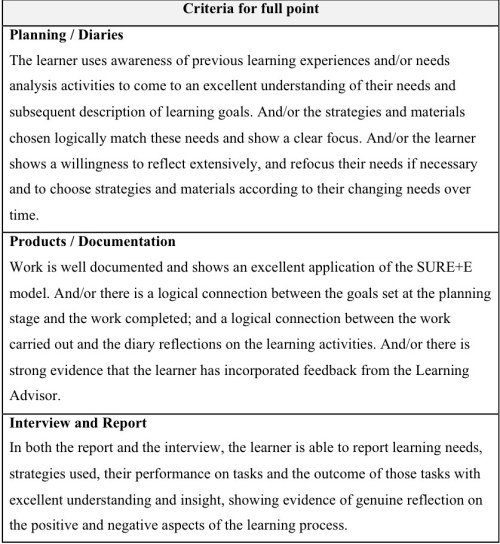


Figure 1. The Grading Band for the First Steps Module

The grading band for FSM (Figure 1) is composed of three parts, each based on an artifact: 1) First Steps Activities, 2) Reflections, and 3) Learning Plan. For the First Steps Activities, in which students complete a series of activities to understand SDL concepts such as goal-setting, the main focus was whether students completed the activities. In Reflections, students answer reflective questions to demonstrate whether they understand the concepts introduced in each unit and how they think they can apply them in their own learning; here, “the ability to reflect” was the primary concern. In the Learning Plan, students write their language goals and explain how they will achieve them, and the key criteria were whether the plan was “completed,” “relevant,” and “well-documented.” Although the band appears to focus on whether there is evidence that thinking occurred, it does not evaluate the quality of that thinking.

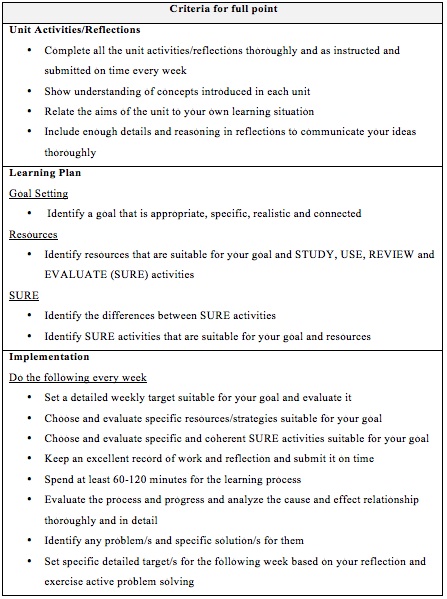


Figure 2. The Grading Band for Learning How to Learn

The grading band for LHL (Figure 2) also has three categories, one for each of the evaluated artifacts: 1) Planning / Diaries, 2) Products / Documentation, and 3) Interview and Report. This band includes more phrases that show clearer attention to the thinking process. For example, it calls for learners to show “a willingness to reflect extensively” and for project work to show “a logical connection” between goals and work completed. Nonetheless, as with the FSM band, the wording leaves ample room for different interpretations, and the features to be identified for evaluation needed further clarification.

Simply put, both grading bands attempt to take thinking skills into account when calculating module grades. However, how exactly thinking or “reflection” is, or should be, assessed is not clearly illustrated in the bands and therefore needed to be elucidated.

Research on What Reflection Means

Instead of adapting an existing rubric for SDL skills or reflection, we built the new band on our own experience and expertise in order to create a grading band with meaningful, clear descriptors suited to our context.

Although the previous grading bands leave room for different interpretations, grading appears to be highly consistent across the LA team. One reason may be that all LAs take part in a norming session before grading each semester; in these sessions, there is little discrepancy in LAs’ grades when they rate the same sample of student work. There is also a record of the final evaluative comments that previous LAs have given to students, and these comments are highly coherent in terms of what they mention. All new LAs refer to these comments, which may help build a unified understanding of how the modules are graded.

This consistency suggests that LAs have formed shared internal grading criteria that are not explicitly stated in the bands. To test this hypothesis, and to understand more deeply how LAs interpret the vaguely defined terms describing unobservable elements of SDL in the grading bands and apply them during grading, a research project was conducted in the hope that its results would form the foundation of the new grading band. The research used a think-aloud protocol while LAs graded two students’ modules, followed by interviews to elicit qualitative data on LAs’ internal, even subconscious, evaluation processes and related beliefs. Since “reflect(ion)” appears to be the most prominent word used in the grading bands to indicate that some form of thinking is being evaluated, the research focused on how each LA interprets the word when it appears in the band, guided by two research questions: 1) What aspects of students’ work in the modules are valued and assessed? and 2) What does “reflection” mean to the LAs?

Methodology

Eight LAs were individually recorded with an audio recorder and a video camera while evaluating and deciding final grades for the submitted module work (FSM) of two of their students. The students, whom the LAs had worked with throughout the semester, were chosen at random. While grading, the LAs were asked to think aloud. Each think-aloud session was followed by an interview with the researcher, in which the LAs were asked to clarify the specific rationale for their evaluations and how they decided on the grades they gave. In addition, every LA was asked a series of questions (Appendix A) to elicit what they value when grading students’ work and what they think the word “reflection” means. The data were analyzed, and recurring keywords were grouped into common themes identified using a grounded theory approach (Corbin & Strauss, 2008). The data from the think-aloud sessions were compiled into a list of the criteria that LAs look for when grading learning modules (Appendix B). Responses to the question about the definition of “reflection” were also analyzed, and the most frequently occurring key words were identified (Appendix C). The list of criteria and the identified key words were incorporated into the descriptors of the new grading band as far as possible.

Results

The preliminary results revealed that most LAs consider one definition of “reflection” to be the combination of evaluation and planning within the cycle of self-directed learning, represented as a conceptual framework in Figure 3. In addition, a majority of LAs explicitly recognized three elements of students’ reflections as particularly valuable: ‘goal-orientedness’, action plans for improvement, and analytical skills.

Figure 3. Cycle of Self-Directed Learning Process (adapted from Ambrose, Bridges, DiPietro, Lovett, & Norman, 2010)

Goal-orientedness. Greenstein (2012) contends that metacognition, one of the key elements of SDL skills, is “purposeful, targeted, and goal oriented” (p. 85). In line with that contention, this research shows that LAs emphasize ‘goal-orientedness’ when evaluating students’ SDL skills.

Goal-orientedness refers to the degree to which students focus on achieving their goals. When LAs evaluate students’ goal-oriented attitudes, whether students actually achieve their goals (i.e., make linguistic gains) is not directly treated as a sign of how goal-oriented they are. Instead, LAs consider whether students stay aware of their goals and design and conduct their self-directed learning with those goals constantly in mind.

This goal-oriented element is evaluated at two stages of the self-directed learning cycle: planning and evaluating. In the planning stage, LAs graded favorably students’ ability to identify specific language goals and to create a detailed plan of which resources they will use and how. In the evaluation stage, after the plan has been implemented, LAs placed a high value on students’ competence in evaluating their learning experiences in terms of whether they were able to achieve their goals effectively. Furthermore, learning plans that took into account students’ personal preferences, such as preferred learning styles, and personal limitations, such as time constraints, while still matching the stated goals were valued more highly. However, personal preferences are valued only so long as they help students achieve their goals; choosing something enjoyable that does not serve those goals is not useful. For instance, in the think-aloud sessions, one LA mentioned that her student, whose primary goal was to improve her conversational speaking skills and related grammar skills, chose a song by her favorite artist as a learning resource. The advisor expressed concern that even though the student had selected an activity she enjoys, it would not necessarily improve her conversation skills in the most effective way, especially in terms of opportunities for output. Overall, the ability to judge whether something is effective and suitable for goal attainment was regarded as fundamentally important.

Action plans for improvement

1. Enhancing strengths

In relation to goal-orientedness, great emphasis is placed on concrete action plans for improvement that help students achieve their goals. Improvement can be made in two ways: enhancing strengths and reducing weaknesses. When evaluating their learning process, students should ideally analyze its strengths and weaknesses in different areas thoroughly. Once they recognize what went well, they can examine cause-and-effect relationships and detect patterns that can be applied to their future learning. For example, suppose a hypothetical student has a conversation in English with exchange students and realizes that she enjoyed it more than usual, even though she is normally nervous and worries too much about making mistakes. In her reflection, she attributes the difference to the topic of conversation, since they were talking about things she is familiar with, such as her favorite artists and Japanese culture. If she then decides to bring up familiar topics in future conversations, or to familiarize herself with topics that often come up in conversation, she can be said to have capitalized on the positive side of her past experience and generated ideas for future action.

2. Overcoming weaknesses through problem-solving

In addition to recognizing the positive side of their learning experiences, students can also try to solve learning problems. In this case, students are expected to analyze the problem in detail and locate its causes. After examining these factors, they should consider possible solutions, which form a more concrete action plan for their next learning activities. For instance, suppose the same hypothetical student finds that she is too busy and has little time for her English learning activities. After reflecting on the issue, she concludes that she tends to underestimate the time needed for tasks and/or overestimate the time available to her, and that she tries to finish things that have lower priority than her English studies. She therefore decides to cut her working hours at her part-time job and to spend less time visiting club members at her alma mater, which she had felt obliged to do. In this case, she demonstrates good problem-solving skills that result in a specific action plan.

Analytical skills. Beyond the action plans produced through evaluation, analytical skills turn out to be crucial in working toward improvement: specifically, the ability to examine in detail what happened in the learning process and to discern cause-and-effect relationships among the various elements involved. Every LA mentioned key words such as “details” and “reason(ing)” during their think-aloud session, indicating that these are significant factors in their judgments about students’ reflections. These two key words overlap with two of the cognitive strategies for self-regulated learning categorized by Oxford (2011, p. 16), namely “reasoning” and “conceptualizing with details.”

New Curriculum Grading Band

Changes in new curriculum grading band

The prototype of the grading band for the new curriculum (Figure 4) is currently under development. Following Moon (2002), learning outcomes and assessment criteria should ideally be closely interrelated, so that general statements of learning outcomes are clarified by a more fully elaborated assessment rubric. In keeping with this ideal curriculum design process, the grading band was designed to align with and elaborate on the newly created learning outcomes and principles (Lammons, 2013), which are themselves based on the recently completed needs analysis (Takahashi et al., 2013) and on the analysis of the newly designed curriculum. In addition, the new grading band takes into account the research results reported in the previous section and consists of a series of interpretable actions that delineate the features of reflection valued by LAs.

One feature of the new grading band is its attention to problem-solving skills. The band calls for students to identify problems and specific solutions to them. For example, two of the descriptors state that students should be able to “identify any problems and think of specific solutions for them” and “set specific targets for next week based on the reflection and solution to the problems.” Another change is an emphasis on detailed and analytical thinking. Students are expected to choose “specific” goals accompanied by relevant resources and strategies, and one descriptor tells students to “include enough details and reasoning in reflections to communicate your ideas thoroughly.” Furthermore, in keeping with one of our assessment principles, that students should be informed of how they are assessed, a simplified version of the band, along with a Japanese translation, was distributed to students at the beginning of the module so that they would know clearly what was expected of them.

Figure 4. The Grading Band Prototype for the New Curriculum

Conclusion

Evaluating unobservable elements of SDL skills, such as self-evaluation skills, is one of the most challenging tasks in helping students become self-directed learners. Although our new assessment system has not solved all the issues we face, we have decided to experiment with evaluating students’ thinking processes by examining characteristics that we consider desirable in self-directed learning, such as goal-orientedness, action plans for improvement, and analytical skills. This approach aligns with one of the basic assessment principles advocated by Brookhart (2010), which emphasizes the importance of clarifying which characteristics of the thinking process should be taken into consideration.

While it would be an overstatement to say that we can evaluate students’ overall thinking skills by looking at only a limited set of characteristics in their work, the assessment process may become more transparent and fair if we consistently look for the same features in students’ reflections. Consistency and transparency in assessment were among the key elements the LA team agreed on when developing our principles (Lammons, 2013), and it is fair to say that the new grading band has increased both by revealing and elaborating on what LAs look for. It is hoped that, by connecting assessment with learning outcomes and incorporating LAs’ internal criteria for evaluating the modules, the new band addresses all three issues raised by Schraw (2000) regarding the lack of clear, meaningful, and efficient assessment guidelines and tools.

Further modification to the band will be made based on feedback from LAs and students with the hope that the band will become one of the guidelines that lucidly indicates what we all are aiming for in our endeavor to foster self-directed learners.

Notes on the contributor

Junko Noguchi taught at a public high school in Chiba, Japan after getting her MA in TESOL and is currently working as a learning advisor at Kanda University of International Studies. Her research interests include phonology and self-directed learning.

Acknowledgements

At various stages, the following people have been key members of the project described in this case study: Akiyuki Sakai, Atsumi Yamaguchi, Brian R. Morrison, Diego Navarro, Elizabeth Lammons, Jo Mynard, Katherine Thornton, Keiko Takahashi, Neil Curry, Satoko Watkins, Tanya McCarthy, and Yuki Hasegawa.

References

Ambrose, S. A., Bridges, M. W., DiPietro, M., Lovett, M. C., & Norman, M. K. (2010). How learning works: Seven research-based principles for smart teaching. San Francisco, CA: Jossey-Bass.

Brookhart, S. M. (2010). How to assess higher-order thinking skills in your classroom. Alexandria, VA: ASCD.

Corbin, J., & Strauss, A. (2008). Basics of qualitative research: Grounded theory procedures and techniques (3rd ed.). Newbury Park, CA: Sage Publications.

Greenstein, L. (2012). Assessing 21st century skills: A guide to evaluating mastery and authentic learning. Thousand Oaks, CA: Corwin.

Kim, H. S. (2002). We talk, therefore we think?: A cultural analysis of the effect of talking on thinking. Journal of Personality and Social Psychology, 83(4), 828–842. doi: 10.1037//0022-3514.83.4.828

Knowles, M. S. (1975). Self-directed learning: A guide for learners and teachers. Englewood Cliffs, NJ: Cambridge Adult Education.

Lammons, E. (2013). Principles: Establishing the foundation for a self-access curriculum. Studies in Self-Access Learning Journal, 4(4), 353-366. Retrieved from sisaljournal.org/archives/dec13/lammons/

Moon, J. (2002). The module and programme development handbook. London, UK: Kogan Page Limited.

Nation, I. S. P., & Macalister, J. (2010). Language curriculum design. London, UK: Routledge.

Noguchi, J., & McCarthy, T. (2010). Reflective self-study: Fostering learner autonomy. In A. M. Stoke (Ed.), JALT2009 Conference Proceedings. Tokyo, Japan: JALT. Retrieved from http://jalt-publications.org/archive/proceedings/2009/E051.pdf

Schraw, G. (2000). Assessing metacognition: Implications of the Buros symposium. In G. Schraw & J. C. Impara (Eds.), Issues in the measurement of metacognition (pp. 297–321). Lincoln, NE: Buros Institute of Mental Measurements.

Takahashi, K., Mynard, J., Noguchi, J., Sakai, A., Thornton, K., & Yamaguchi, A. (2013). Needs analysis: Investigating students’ self-directed learning needs using multiple data sources. Studies in Self-Access Learning Journal, 4(3), 208-218. Retrieved from https://sisaljournal.org/archives/sep13/takahashi_et_al

Thornton, K. (2013). A framework for curriculum reform: Re-designing a curriculum for self-directed language learning. Studies in Self-Access Learning Journal, 4(2), 142-153. Retrieved from https://sisaljournal.org/archives/june13/thornton/

Watkins, S., Curry, N., & Mynard, J. (2014). Piloting and evaluating a redesigned self-directed learning curriculum. Studies in Self-Access Learning Journal, 5(1), 58-78. Retrieved from https://sisaljournal.org/archives/mar14/watkins_curry_mynard/

Appendices

Appendix A

Interview questions used to clarify LAs’ internal criteria for grading LHL

(1) What do you value when evaluating the weekly journals?

(2) What do you value when evaluating the student’s work?

(3) What do you value when evaluating the interviews and final reports?

(4) What do you think “reflection” in our SDL modules means?

Appendix B

List of LAs’ elicited internal criteria for grading FSM

A. Task Completion

Do tasks completely and properly as instructed.

B. Goal-oriented learning with the SURE+E model

- Understand that small goals are small steps to achieve a Big goal

- Understand the concepts of General Skills and Specific Skills: language skills can be divided into two groups. The first group is called General Skills, which are the four main language skills of Speaking, Listening, Reading, and Writing. The second group is called Specific Skills, which are the three main language skills of Vocabulary, Grammar, and Pronunciation.

- Choose one skill from each of General skills and Specific skills as their goals. The chosen Specific Skill should be the one that will help improve the General Skills.

- Be able to give details about the chosen skills they want to improve

- Be able to state why they chose the skills as their goals

- Be able to choose resources, activities and strategies that help them improve the chosen skills

- Be able to choose resources, activities and strategies that are possibly connected to their interests

- Be able to state the reasons for the choices of their resources, activities and strategies; why they are useful for their goals

- Understand SURE+E (Study it, Use it, Review it, and Enjoy it PLUS Evaluate it) model

- Understand what each element of the SURE+E model means:

a. Study: Choose something to focus on and learn something new.

b. Use: Practice what you studied in a different setting. For example, you could try using new phrases you have learnt in a conversation.

c. Review: From time to time look back on what you have studied so you don’t forget it.

d. Enjoy: Put yourself in an enjoyable English environment. For example, read stories, watch movies, listen to music, send emails and chat to friends in English.

e. Evaluate: Check your progress once in a while to see if you are achieving your Big and Small goals. Compare your level at the beginning of your plan with the level you achieve after you study for a while.

i. Keep two records and compare them to see whether there is improvement

ii. Appropriate frequency

iii. Appropriate intervals

C. Reflection: Critical and logical thinking

- Write answers/reflections with enough quantity and details/examples

- Write personalized answers/reflections

- Write answers/reflections that show deep thinking

- Write answers/reflections that include good ideas

- Write rationale for their answers/reflections

D. Communication with LAs

- Read the LA’s comments

- Answer the LA’s questions, if any

- Ask questions if needed

- Implement the LA’s advice if necessary

Appendix C

Emerging key words from LA interviews on definition of “reflection”

- Reasoning / Logical connection

- Details (not descriptive) / Specific information

- Noticing/ Awareness / Discovery / Realization

- Specific and coherent goal

- Balance of learning

- Thinking back and forward:

  - What went well? / What didn’t go well? (evaluation)

  - Why?

  - What action should be taken based on the observation? (planning)